Having had some time to reflect on yesterday's Build keynote, the idea that resonated with me most was that we have, for the first time in our short CS history, a platform that lets us create autonomous and intelligent applications using widely accessible tools. Over the years we have been surpassed by intelligent applications (Deep Blue, AlphaGo); however, as a developer, I am less concerned with how applications can defeat us and more concerned with how they can intuitively and independently assist in everyday life.

To that end I wanted to delve a little more deeply into the following Microsoft Cognitive Services:

- Language Understanding Intelligent Service API - Understand language contextually, so your app communicates with people in the way they speak.

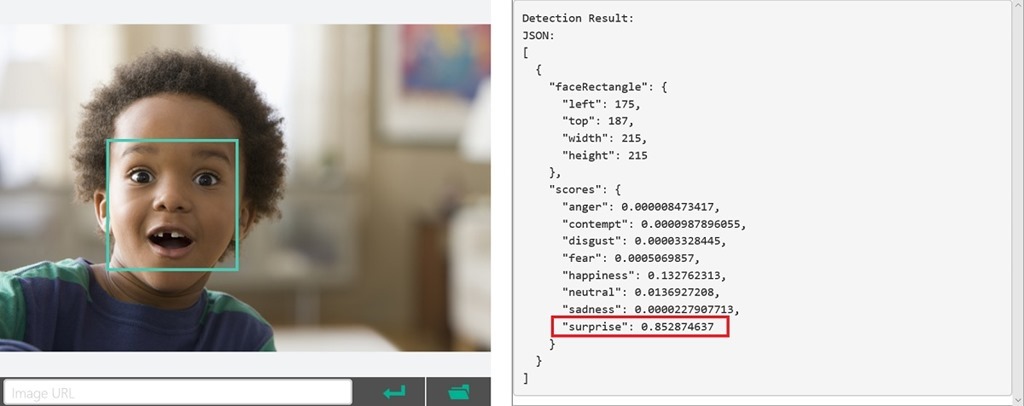

- Emotion API - Create apps that respond to moods, recognize feelings, and get personal with the Emotion API. Using facial expressions, this cloud-based API can detect happiness, neutrality, sadness, contempt, anger, disgust, fear, and surprise. The artificial intelligence algorithms detect these emotions based on universal facial expressions, functioning even cross-culturally.

- Text Analytics API - Use a few lines of code to easily analyze sentiment, extract key phrases, detect topics, and detect language for any kind of text (across 120 languages).

These services have existed in the wild for a while under seemingly benign and amusing guises like How-Old.net, where we happily provided Microsoft with a plethora of test images to hone its perceptual intelligence capabilities. Well, now there is enough collective intelligence behind them for a platform that apparently rivals IBM's Watson.

Emotion Recognition API

As we have come to expect, these APIs are RESTful. The Emotion API accepts either an image (JPEG, PNG, GIF, BMP) or a video; a POST asking it to analyze an image by URL would look something like this:

```http
POST https://api.projectoxford.ai/emotion/v1.0/recognize HTTP/1.1
Content-Type: application/json
Host: api.projectoxford.ai
Ocp-Apim-Subscription-Key: <your-subscription-key>
Content-Length: 45

{ "url": "http://poppastring.com/mypic.jpg" }
```
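To make the request concrete, here is a minimal Python sketch, using only the standard library, that builds the same POST and can optionally send it. The `Ocp-Apim-Subscription-Key` header is how I understand Cognitive Services calls were authenticated, so treat that header and the placeholder key as assumptions; the function names are illustrative, and the endpoint is simply the one from the request above.

```python
import json
import urllib.request

# Endpoint taken from the example request above.
EMOTION_URL = "https://api.projectoxford.ai/emotion/v1.0/recognize"


def build_emotion_request(image_url: str, subscription_key: str) -> urllib.request.Request:
    """Build (but do not send) a POST asking the Emotion API to analyze a hosted image.

    Assumption: the key is passed in the Ocp-Apim-Subscription-Key header.
    """
    body = json.dumps({"url": image_url}).encode("utf-8")
    return urllib.request.Request(
        EMOTION_URL,
        data=body,  # urllib adds a matching Content-Length header when the request is sent
        headers={
            "Content-Type": "application/json",
            "Ocp-Apim-Subscription-Key": subscription_key,
        },
        method="POST",
    )


def recognize_emotions(image_url: str, subscription_key: str) -> list:
    """Send the request and decode the JSON array of detected faces."""
    request = build_emotion_request(image_url, subscription_key)
    with urllib.request.urlopen(request) as response:
        return json.loads(response.read().decode("utf-8"))
```

Building the request separately from sending it keeps the payload easy to inspect offline; `urlopen` raises `urllib.error.HTTPError` for non-2xx responses, such as a missing or invalid key.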

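In response you get a JSON array with one entry per detected face. Each entry gives the face's bounding rectangle and a score for each of the eight emotions, and the highest score marks the most likely one (here, happiness):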

```json
[
  {
    "faceRectangle": {
      "left": 68,
      "top": 97,
      "width": 64,
      "height": 97
    },
    "scores": {
      "anger": 0.00300731952,
      "contempt": 5.14648448E-08,
      "disgust": 9.180124E-06,
      "fear": 0.0001912825,
      "happiness": 0.9875571,
      "neutral": 0.0009861537,
      "sadness": 1.889955E-05,
      "surprise": 0.008229999
    }
  }
]
```
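Because the response is an array with one object per face, picking out the most likely emotion is just a matter of taking the highest score for each face. A short Python sketch over the sample response above (the function name is my own, not part of the API):

```python
import json

# The sample response from above: one object per detected face.
SAMPLE_RESPONSE = """
[
  {
    "faceRectangle": {"left": 68, "top": 97, "width": 64, "height": 97},
    "scores": {
      "anger": 0.00300731952,
      "contempt": 5.14648448E-08,
      "disgust": 9.180124E-06,
      "fear": 0.0001912825,
      "happiness": 0.9875571,
      "neutral": 0.0009861537,
      "sadness": 1.889955E-05,
      "surprise": 0.008229999
    }
  }
]
"""


def dominant_emotions(payload: str) -> list:
    """Return (faceRectangle, top emotion, score) for each face in the response."""
    results = []
    for face in json.loads(payload):
        scores = face["scores"]
        emotion = max(scores, key=scores.get)  # emotion with the highest score
        results.append((face["faceRectangle"], emotion, scores[emotion]))
    return results


for rect, emotion, score in dominant_emotions(SAMPLE_RESPONSE):
    print(f"face at ({rect['left']}, {rect['top']}): {emotion} ({score:.2%})")
    # prints: face at (68, 97): happiness (98.76%)
```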

This is essentially a new kind of signal that your devices and applications were previously oblivious to. Until now, the only way to express frustration with your computer likely involved banging on the keyboard; now these cues can be detected in less destructive ways. I also think it will be interesting to see which additional disciplines (sociology, psychology, anthropology) get folded into software engineering. Just as art, design and user experience are integral parts of our sprints, we may also find ourselves asking about the emotional connection our services are creating.
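As a rough illustration of acting on that signal, the sketch below flags a face as "frustrated" when its combined anger, contempt and disgust scores exceed a threshold. Both the grouping of emotions and the 0.5 threshold are hypothetical choices for illustration, not anything the Emotion API defines, and the helper names are my own.

```python
# Hypothetical grouping of Emotion API categories treated as "frustration".
FRUSTRATION_EMOTIONS = ("anger", "contempt", "disgust")
# Hypothetical threshold; a real app would tune this against its own users.
FRUSTRATION_THRESHOLD = 0.5


def seems_frustrated(scores: dict, threshold: float = FRUSTRATION_THRESHOLD) -> bool:
    """True if the summed negative scores for one face exceed the threshold."""
    return sum(scores.get(name, 0.0) for name in FRUSTRATION_EMOTIONS) > threshold


def maybe_offer_help(faces: list) -> str:
    """faces is the decoded JSON array returned by the recognize call."""
    if any(seems_frustrated(face["scores"]) for face in faces):
        return "Looks like something went wrong. Want some help?"
    return ""
```

Summing several categories rather than checking only the top emotion catches mixed expressions where no single negative score dominates.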

Check out the Open API testing console here!
